Rectifier Neural Activation Function

The activation function of a node in an artificial neural network is a function that calculates the output of the node based on its inputs. In this context, the rectifier, also referred to as a rectified linear unit (ReLU), is an activation function defined as the positive part of its argument: f(x) = max(0, x). ReLU is widely used in deep learning, where rectified linear units are often compared to sigmoid units, and it has had a strong influence on neural network design.
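The definition of the rectifier as the positive part of its argument can be illustrated with a minimal sketch in plain Python. The function names `relu`, `relu_grad`, `sigmoid`, and `sigmoid_grad` are illustrative assumptions and are not taken from any library; the value chosen for the ReLU gradient at exactly zero is a common convention, not something the source specifies.

```python
import math


def relu(x: float) -> float:
    """Rectifier: return the positive part of x, i.e. max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Slope of the rectifier: 1 for positive inputs, 0 otherwise.

    The value at x == 0 is set to 0 here by convention (an assumption).
    """
    return 1.0 if x > 0 else 0.0


def sigmoid(x: float) -> float:
    """Logistic sigmoid, shown only for comparison with the rectifier."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Slope of the sigmoid: s * (1 - s), which shrinks for large |x|."""
    s = sigmoid(x)
    return s * (1.0 - s)


if __name__ == "__main__":
    # Print both activations and their slopes for a few sample inputs.
    for x in (-2.0, 0.0, 3.0):
        print(x, relu(x), relu_grad(x), sigmoid(x), sigmoid_grad(x))
```

In this sketch the rectifier passes positive inputs through unchanged and maps everything else to zero, while the sigmoid squashes every input into the interval (0, 1). The slope functions show one way the two are typically contrasted: the rectifier's slope stays at 1 for any positive input, whereas the sigmoid's slope is at most 0.25.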

From www.chegg.com

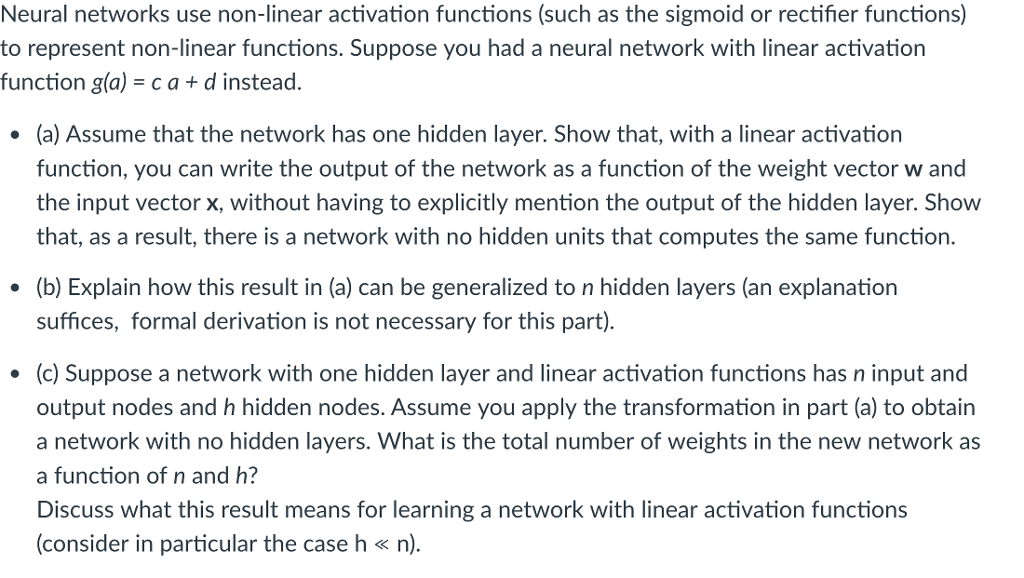

Neural networks use activation functions

From www.researchgate.net

The activation functions for all inner nodes are rectifiers, while the

From www.pngegg.com

Rectifier Activation function Artificial neural network Derivative

From www.numerade.com

SOLVED 4. The following image illustrates the topology of a

From www.chegg.com

Neural networks use activation functions

From towardsdatascience.com

What is activation function ?. One of most important parts of neural

From deepai.org

Rectifier Neural Network with a DualPathway Architecture for Image

From www.semanticscholar.org

Rectifier (neural networks) Semantic Scholar

From slidetodoc.com

Ch 9 Introduction to Convolution Neural Networks CNN

From www.semanticscholar.org

Rectifier (neural networks) Semantic Scholar

From www.ahajournals.org

Slow Delayed Rectifier Current Protects Ventricular Myocytes From

From www.researchgate.net

A neural network representation (the rectifier linear function in the

From www.youtube.com

Rectified Linear Unit(relu) Activation functions YouTube

From machinelearningmastery.com

A Gentle Introduction to the Rectified Linear Unit (ReLU

From survival8.blogspot.com

survival8 Activation Functions in Neural Networks

From slideplayer.com

Deep Learning Introduction ppt download

From imgbin.com

Activation Function Rectifier Artificial Neural Network Mathematics PNG

From www.researchgate.net

Activation functions tested. A Piecewise, rectifierbased activation

From survival8.blogspot.com

survival8 Activation Functions in Neural Networks